Creating a book search engine

— Data, Theory

In this post

Implementing a search engine, studying what solutions exist and trying to bring something new can be quite challenging, which is why I'll talk about my thinking and research process while creating a book SE. All the content comes from a paper I had a to turn in at Tsinghua University (清华大学).

Introduction

Understanding the goal

I used to read a lot when I was younger, mainly fantasy stories with sorcerers, incredible beasts and people saving the world without anyone ever knowing about it. I loved starting books, finishing them, and thinking about the adventures I just had, however, I always wanted more, I always wanted a new book, a new hero, a new story to read through. Therefore, I was continuously searching for books related to the one I just read. How does one do that? How do you simply find a book which suits your need, without asking three different librarians, who might redirect you to books you already know.

This was my project: being able to search for books, based on their title, their reviews, their author or their publisher. In other words, being able to construct and visualize the relationship network of a book, and thus, making a suggestion based search engine.

Two models, one implementation

Without diving into the details, I encountered far too many problems with my first idea to be able to go through with it. My first model will be detailed in the next parts of this report, how I imagined it and the difficulties I had, forcing me to change my initial idea to a simpler one, but not less challenging.

While working on the first model, I have had the need to use well-known book APIs to manipulate huge data of books and entire libraries. It is then that I realized those APIs (Amazon, Google, or custom made) were not returning the results I expected. This is how I made the choice to tackle this problem by creating a search engine able to predict what the user wants, and what his query is searching for. Having given up on the suggestions part, this search engine was made for precision, and is, in the end, nothing more that an abstracted API, which can be used by anyone else.

This is how and why I will, in this report, talk about my suggestion model (not implemented), and my precision model and its implementation.

Related Work

Suggestion model

While some websites might say otherwise [1], as explained in the next section, real suggestions in search engines do not exist, they are mere predictions. There are multiple reasons for this, which I will not explain here as it would demand a far longer report, but the easy way to look at it is: too much work and resources for uncertain improvements. Binary trees, graphs and machine learning are the main ways to create real suggestions, but all of them require a huge amount of data and time to start being efficient, which is one of the reason I could not implement this model.

Precision model

Google uses a prediction service to help complete searches and URLs typed in the address bar: these suggestions are based on related web searches, your browsing history, and popular websites. If your default search engine provides a suggestion service, the browser might send the text you type in the address bar to the search engine.[2]

However, an important thing to note is that Google (and other search engines) does NOT suggest anything, these are merely predictions based on past searches. While it works very well in most cases and reduces typing by up to 25 percent, it does not work when making requests[3]. Indeed, imagine wanting to use an API, or letting a user search for a book on your platform, how can you return a precise result without trying to predict what the user wants? This is what a search engine is all about: understanding the request, knowing the user, returning data. The reason why this is so much important is explained in Results.

Model

Suggestion

My goal in order to suggest related books to the one that was just read, was to construct the relationships network of a book. I mean by that, being able to link books together thanks to what defines them (their story, their author, title, publisher, etc...). However, in order to do that, we first need to understand the way their attributes interact with each other, I will use the well known Harry Potter series to explain this.

Title

The way titles link to each other between books is quite counter intuitive. It is easy and natural to think that in a book series, all the books would form a strong link in our graph, and other less relevant titles would be scattered around them. However, for a title "X", the second volume is rarely named "X number 2", in the case of Harry Potter, we get "Harry Potter and the philosopher's stone" then "Harry Potter and the chamber of secrets". Notice how the linking string Harry Potter is not the dominant one? If you add to this effect the fact that "Harry Potter and the cursed child" or "Harry Potter the complete collection" exist, your graph gets messed up really fast. Which is why you need to isolate part of the string, and compare it to other titles, giving you the following network schema:

Author

The author relationships is more straightforward, authors have in most cases written a certain number of books, more popular than others, defining how important they are in the graph. If you take the example of J.K Rowling, Harry Potter books are best-sellers, where the cursed child or Hogwarts: A History were not sold as much. Following this idea we can construct a network where each book is defined by a threshold of importance linked to the author:

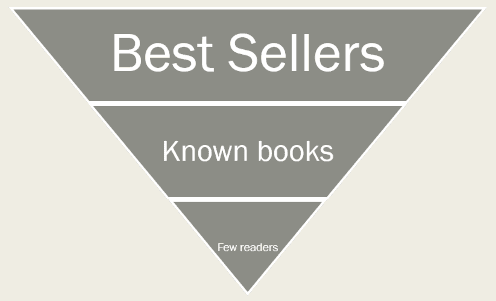

Publisher

Similarly to an author, publishers contain many books and get successful relatively to their published book success. Therefore, it makes sense to divide a publisher database into categories where best-sellers are first, and unknown books last, giving us a hierarchy looking like this:

Implementation

The idea for this model was to use all the previous schemas to construct three relationships graphs, and navigate through them thanks to the user input. The way it would have been different from simple prediction, is that instead of only predicting Harry Potter 2 from Harry Potter 1, it would also link the title to the author's other works and the publisher's library. We could also imagine creating tagging graphs (linking books that contain the word "magic", "wizard", "fantasy", "ghosts" and so on together), or a surprise me function to extend on purpose the request's reach in our network.

As said previously, because of technical difficulties and problems explained in Results, this has never been implemented, but I still think the idea is good, and would like to make something out of it one day.

Precision

The precision model is far simpler than the suggestion model, but requires a lot of tweaks to make it work correctly. The goal of the model is to predict what the user will ask for before he is asking for it, most search engines do this by caching previous requests but I tried taking it one step further. My assumption is as follows: The majority of requests is about a minority of books, meaning that most of the time, we all search for and re-read the same few books, which usually are best-sellers or those getting the best reviews. How many times have I myself searched for Harry Potter, the Lord of the Rings or The little prince without once trying to get information on lesser known books I own ?

In order to prove my point I did a small simulation. I chose two best-sellers (The Hobbit and The Da Vinci Code)[4] and compared their trends on google search worldwide. Without any surprises, their interest over time look quite similar and none of them seriously takes the lead[5].

I then took one of the book I enjoyed recently: What if from Randall Munroe (not a huge hit, lots of sales, very good reviews on Amazon, in English) and compared it to The Da Vinci Code. Well, even though What if is far from being unknown, it gets completely crushed under the weight of The Da Vinci Code's popularity[6] (making me wonder about the shadow effect popularity can have in computer science, but that is a question for another time).

Knowing that, I had decided to create a request endpoint using a simple method that proved to be incredibly efficient, but first I needed data, and more precisely a list. This list that I constructed thanks to crawlers was a ranking of the most well-known existing books, from most popular to least popular, I cross-referenced data in order to get the many existing title / author and publisher texts formatting (in order to compare it to the incoming request) and indexed all the results. In order to add precision I could have added amazon reviews, trends, new books coming out, movie adaptations and so on, but time was in my way and I wanted my demo to be a working Proof Of Concept.

Once my database was filled, I just needed to follow this workflow:

-

Receive the request, sorted by Title / Author / Publisher thanks to a tagging system

-

Scan my database for results that might match the request. All the results found get a priority of one.

-

Send the initial request to Google Book Api and get data about all the books my database does not know about. Those results get a priority of two.

-

Send back a list of found books, sorted by increasing priority.

As straightforward as it sounds, this system proved to be efficient in most cases, falling back onto Google Book Api when needed, in order to find smaller books. An interesting thing I noticed while testing, is that usually, when searching for lesser known things, the user knows what he is searching for, and thus, usually enters the full title of the book (whereas I have never seen someone type the full "Harry Potter and the philosopher's stone" title into google). This detail changes a lot how the search engine behaves, because it does not need to "guess" what the user is searching for anymore, but only needs to get what matches the request's string.

Results

The problem with existing APIs

In order to understand the problems my model is trying to fix and to compare my results, here are the first book titles returned by Google and Amazon, compared to the user's request.

| Request | Google Books Api | Amazon.com book section |

|---|---|---|

| The Da Vinci | The Truth Behind the Da Vinci Code | Leonardo Da Vinci |

| Dan Brown | Angels & Demons | Robert Langdon Series [...] |

| Harry Potter and the | Harry Potter and the Classical World | Harry Potter Paperback Box Set |

| Harry Potter 2 | How to Draw Harry Potter 2 | Harry Potter and the Chamber of Secrets |

In these eight tests, there is only one where the user gets as first result what he really wants, even though these searches are aimed at books, and look clear to a human.

When testing for Dan Brown, a lot of his books were returned before the Da Vinci Code, which made me wonder: had I made a mistake ? Maybe the trend generated by new books coming out was important enough to overtake old best-sellers... Full of doubt about my work and my assumptions, I went back to trends.google.com and compared The Da Vinci Code to Angel & Demons (returned by Google Book API) and Origin (Dan Brown's last book) over the last year. Conclusion: they do not even come close to the former, the APIs I was using were definitely using an research algorithm that did not suit my needs.

Demo

You obviously were not here for my demo, you'll just have to imagine it.

My demo implementation was made using Flutter, which is a frontend framework made in Dart, and node.JS for the crawling part. As shown during the presentations done during class time, my platform is composed of a search bar and three tags that can be selected (Title, Author and Publisher), defining what I will be searching for once the user presses enter. Once the workflow defined previously is applied and I have my list of books, I display them in a scrolling view for the user to see, and select the one he is looking for. The platform then opens a Dialog containing more information about the book, like its published date, description, publisher, and so on.

Efficiency

I decided to make two comparisons of results returned by my implementation and Google Books API. The three book titles listed below are the three top results returned in the list.

-

"Harry Potter 2"

Precision model Google Books API Harry Potter and the Chamber of Secrets How to draw Harry Potter 2 Harry Potter Instrumental Solos (1-5) LEGO MINIFIGURE HARRY POTTER 2 How to draw Harry Potter 2 Harry Potter and International Relations -

"Harry Potter and the"

Precision model Google Books API Harry Potter and the Deathly Hallows Harry Potter and the Classical World Harry Potter and the Order of the Phoenix Harry Potter and the Bible Harry Potter and the Prisoner of Azkaban The Ultimate Harry Potter and Philosophy

What is interesting to note, is that by using my model, I do not, in any way, add a negative search effect to the returned data. What I mean by that is that even though my method is limited in its efficiency, it does not have any unwanted side effect: when searching for "Harry Potter 2", even if the user wants to find "How to draw Harry Potter 2", getting "Harry Potter and the Chamber of Secrets" is expected and defined as normal behavior by the user. As weird as it sounds, returning what the user is not looking for, sometimes is the best way to be fault-proof.

Conclusion

I have learned a lot about search engines suggestions and predictions in this project, and have a far better understanding of how everything works on the backend side. As frustrating as it might be, the conclusion I have come to, is that no search engine is perfect. In order to be more precise, or more suggestive to the user, you need to take a stand about how you interpret the request, and what you want to return to the user. The same book search engine can not be perfect for best-sellers and lesser known books at the same time, it is all about tradeoff. However, a middle ground exists, and is used by Google, Baidu, Sogou, Bing: use what other users have searched for (explained in Suggestion model). In the end, if you want an API or a search engine suiting your needs, you need to modify the way you order your results, if you have the data, it is all about finding a smart way to sort it compared to the request.